NVIDIA A100 Tensor Core GPU

The best acceleration for your data center

With unprecedented acceleration, the NVIDIA A100 Tensor Core GPU serves as the foundation for the most powerful elastic data centers for AI, data analytics, and HPC.

Leverage the latest NVIDIA Ampere architecture for up to 20x higher performance than the previous generation. A100 is available in 40GB and 80GB memory versions.

Suitable for all workloads

Deep Learning training

The complexity of AI models is increasing rapidly, and so are the demands on computing power.

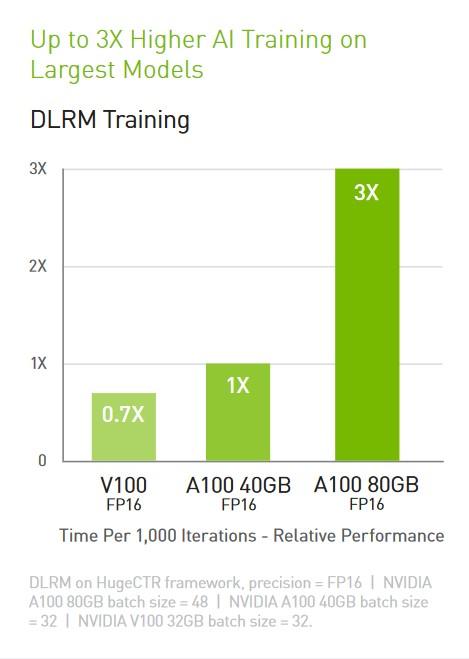

NVIDIA A100 Tensor Cores with Tensor Float 32 (TF32) precision deliver up to 20x more performance than NVIDIA Volta with no code changes, plus an additional 2x boost with automatic mixed precision and FP16. For the largest models with massive data tables, such as Deep Learning Recommendation Models (DLRM), the A100 80GB provides up to 1.3 TB of unified memory per node and up to 3x more throughput than the A100 40GB.
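To illustrate what TF32 precision means, the following Python sketch emulates rounding an FP32 value to TF32. TF32 keeps FP32's 8-bit exponent (and therefore its numeric range) but only 10 of its 23 mantissa bits. The function name `to_tf32` is hypothetical, and round-to-nearest-even is an assumption made for this sketch; it does not describe how the Tensor Cores actually perform the conversion.

```python
import struct


def to_tf32(x: float) -> float:
    """Emulate TF32 precision: FP32's 8-bit exponent with a 10-bit mantissa.

    Assumption: round-to-nearest-even on the 13 dropped mantissa bits.
    Inf/NaN inputs are not handled specially in this sketch.
    """
    # Reinterpret the value as its 32-bit FP32 bit pattern.
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    dropped = 13  # 23 FP32 mantissa bits minus 10 TF32 mantissa bits
    # The lowest kept bit decides ties (round half to even).
    lsb = (bits >> dropped) & 1
    bits += (1 << (dropped - 1)) - 1 + lsb
    # Clear the dropped bits and stay within 32 bits.
    bits &= ~((1 << dropped) - 1) & 0xFFFFFFFF
    return struct.unpack("<f", struct.pack("<I", bits))[0]


if __name__ == "__main__":
    print(to_tf32(3.14159265))  # → 3.140625
```

The result keeps roughly three significant decimal digits while covering the full FP32 range, which is why existing FP32 code can run in TF32 mode without modification.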

Inference for Deep Learning

The A100 introduces breakthrough features to optimize inference workloads. Multi-Instance GPU (MIG) technology lets multiple networks run concurrently on a single A100 GPU for optimal use of compute resources. On top of the A100's other inference performance gains, structural sparsity support delivers up to 2x more performance.
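The structural sparsity supported by A100 is based on a fine-grained 2:4 pattern: in every group of four consecutive weights, at most two are nonzero. The following Python sketch enforces that pattern by magnitude pruning, keeping the two largest-magnitude values in each group. The function `prune_2_of_4` is hypothetical and only shows the pattern; it does not model the Sparse Tensor Cores that skip the zeros, and in practice a network is usually fine-tuned after pruning to recover accuracy.

```python
from typing import List


def prune_2_of_4(weights: List[float]) -> List[float]:
    """Zero the two smallest-magnitude values in each group of four weights."""
    if len(weights) % 4:
        raise ValueError("number of weights must be a multiple of 4")
    pruned: List[float] = []
    for start in range(0, len(weights), 4):
        group = weights[start:start + 4]
        # Keep the indices of the two largest magnitudes (stable on ties).
        keep = set(sorted(range(4), key=lambda j: abs(group[j]), reverse=True)[:2])
        pruned.extend(w if j in keep else 0.0 for j, w in enumerate(group))
    return pruned


if __name__ == "__main__":
    print(prune_2_of_4([0.1, -0.9, 0.4, 0.05, 1.0, 2.0, -3.0, 0.5]))
    # → [0.0, -0.9, 0.4, 0.0, 0.0, 2.0, -3.0, 0.0]
```

Because exactly half of each group is removed in a fixed, regular layout, the hardware can store only the kept values plus small indices and skip the zeros, rather than handling arbitrary scattered sparsity.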

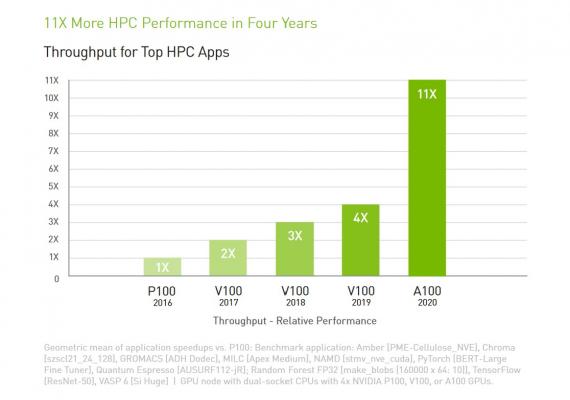

High-Performance Computing

Top performance for tomorrow's simulations and discoveries.

NVIDIA A100 introduces double-precision Tensor Cores, the biggest leap in HPC performance since the introduction of GPUs. Combined with 80 GB of the fastest GPU memory, they let researchers reduce a 10-hour double-precision simulation to under four hours on A100.

Powerful data analysis

Analyzing and visualizing large data sets with scale-out solutions is often slowed down when the data is spread across multiple servers. Servers accelerated with A100 provide the necessary computing power along with large memory capacity, high memory bandwidth, and scalability. In a big data analytics benchmark, the A100 80GB delivered insights with 83x higher throughput than CPUs and 2x higher performance than the A100 40GB, making it ideal for workloads with ever-growing data sets.

Any Questions?

If you would like to know more about this subject, I am happy to assist you.

Contact us